To read my fairy tales and poems, click on a book cover.

Fairy tales and fantasy for children aged 5-12: new, kind, and educational, with a focus on environmental education. Texts, pictures, video. I welcome feedback and reviews. I am looking for publishers in the CIS. The author is from Almaty.

Everything I write for children will make them smarter and kinder and will not waste their time.

The Oath

I will not hide my confession:

To the Earth I swear, Earthlings,

To fight for her dawn

For thousands of years to come.

My pen is my weapon.

With it I go into battle, people,

And my persistent, modest labor

I will submit to your strict judgment.

Dear friends, I present to you «Новые времена – новые мысли» ("New Times, New Thoughts"), a collection of philosophical quotations and reflections by contemporary writers, published at the end of 2024 by the International Union of Writers (Интернациональный союз писателей). It includes my article «Как тебе не стыдно, человечество?» ("Aren't You Ashamed, Humanity?").

I wrote these verses long ago, in my youth, and I remain true to them to this day.

The main goal of all my books is to make the world a better place.

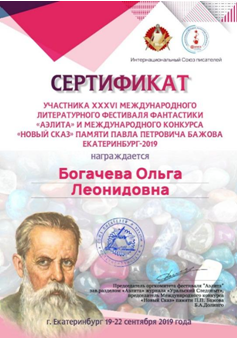

I am a former publisher, now retired. I did the prepress work myself and published all my books in print runs of 100 copies each in Russian, plus two in Kazakh, to show them at the international book fairs «Шелковый путь» in 2015 and 2017. I hold diplomas from these fairs. In 2019 the Union of Writers awarded me a Certificate of Participation in the international prose competition of the «Аэлита-2019» festival. This was the XXXVI International Literary Festival of Science Fiction «Аэлита». I presented my science-fiction story «Мамонтенок из пробирки» ("The Test-Tube Baby Mammoth"). I was also awarded a Certificate of Participation in the International Competition «Новый сказ», held in memory of Pavel Petrovich Bazhov.

About the author Olga Bogacheva and her books for children aged 5-12 and for adults

Olga Leonidovna Bogacheva has been a member of the Union of Journalists of the Republic of Kazakhstan since 1996. She was born on 13 December 1946 in the town of Tatarsk, Novosibirsk Oblast, Russia. She came to Kazakhstan on a work assignment and has lived and worked here since 1967. She devoted several years of her journalistic career to environmental programmes on Kazakh radio and television, and she worked as a correspondent and editor-in-chief at a number of print media outlets. In 2015 and 2017 she was awarded diplomas of the VIII and IX International Book Fairs «По Великому Шелковому пути» for a series of children's fairy tales in Russian and Kazakh.

The poetry collection «На солнечном ветру» ("On the Solar Wind") is devoted to the history of Kazakhstan and its nature.

The author's active stance as someone concerned about the fate of our planet's nature is also reflected in her literary works for children. Environmental education of the younger generation is the main theme of her fairy tales. This theme runs through her adventure tale «Змей Горыныч, Ваня и Бабуся Ягуся в лесу» ("Zmey Gorynych, Vanya, and Granny Yagusya in the Forest"), published in 2010; the fairy tale «Кот Мур и его команда» ("Mur the Cat and His Team") for preschool and early primary school children, published in 2015; the fantasy novella «Приключения динозавров в России» ("The Adventures of Dinosaurs in Russia"), 2017; and her new science-fiction story «Мамонтенок из пробирки» ("The Test-Tube Baby Mammoth"), 2018.

«Змей Горыныч, Ваня и Бабуся Ягуся в лесу» ("Zmey Gorynych, Vanya, and Granny Yagusya in the Forest") tells about useful and harmful plants, mushrooms, and berries in simple language and in an engaging form accessible to older preschool and early primary school children. Children learn to see the beauty of nature and to love, cherish, and protect it from barbaric treatment.

Vanya wants to become a forest doctor and treat animals, like Doctor Aibolit. Baba Yaga, the head doctor at the forest hospital, teaches him folk wisdom. Kindly old Zmey Gorynych helps her. He is an old friend of Vanya's who dislikes the noise and smoke of big cities. Zmey Gorynych goes around the forest collecting bottles and tin cans left behind by tourists. Baba Yaga explains to Vanya that a bottle left in the forest acts as a lens.

It concentrates the sun's rays into a single hot beam that can set the forest on fire. Mikhailo Potapych the Bear has started his own business: he makes toothpaste tubes out of those tin cans. He makes the toothpaste from birch ash and honey so that all the animals can brush their teeth morning and evening.

All of Vanya's forest adventures end in a merry forest festival. Even the grey Wolf comes, tail between his legs, to ask forgiveness of everyone he has wronged in the forest. The animals make merry, singing and dancing on the meadow by Baba Yaga's hut, for they have all been cured here.

Then Zmey Gorynych takes Vanya home to his mother. Vanya shows her photographs of forest flowers on his mobile phone. He knows that flowers in the forest must not be picked, or there will be none left. We must protect nature!

«Кот Мур и его команда» ("Mur the Cat and His Team") is a fairy tale for preschool and early primary school children. It tells about the most common insect pests of forests, fields, orchards, and vegetable gardens. Mur, a magic cat, learns to read and write, and even to wash dishes, alongside his owner Aisha, a first-grader. He learned to walk on two legs and dance long ago.

He is friends with a big dog named Ava. All three love spending the summer at grandmother's dacha. One day, arriving for their holiday, they see that grandmother's vegetable garden and the nearby forest are in danger. Colorado beetles are destroying everything that grows in the garden, and locusts have attacked the forest. Mur and his friends take up the fight against the pests and win.

The forest birds and the forest aviation service help him. Children learn a piece of wisdom: it does not matter that you are small. What matters is to be unafraid and to do good deeds, and then you will defeat any enemy.

«Приключения динозавров в России» ("The Adventures of Dinosaurs in Russia") is a fantasy for middle-school-age children. From the book, children learn many new and interesting facts about scientists' achievements in cloning organisms, about the animal and plant life of Russia's various climate zones, about the gemstones of the Urals, and about amber, the sun stone of Russia's western coast.

The author draws on mythological and fairy-tale motifs. Besides the young scientists Kolya and Vasya, the dinosaur clones Din-Dima and Din-Dina, and the dog Zeus, the characters include aliens and well-known storybook heroes: Captain Vrungel, the Mistress of the Copper Mountain, Danila the Master, and Granny Manya, a former Baba Yaga who gives the travellers a flying carpet and a magic tablecloth.

The Mistress of the Copper Mountain reveals her secret to readers: the hidden powers of the Earth can help only a person who is kind and hardworking, such as Danila the Master.

On Russia's Atlantic coast, a Whale and a seagull turn to the dinosaurs for help. They want the dinosaurs to ask people to stop everything that pollutes the World Ocean and the Earth's atmosphere, or else people themselves will suffer.

With the dinosaurs' help, the aliens send a message to the people of Earth. They advise them to buy their children fewer toy pistols, machine guns, and tanks, and more educational toys and books, so that children grow up smart and kind. "Remind people of Dolly, the cloned sheep, who was ill-tempered and died young," says the message from the planet Nibiru. "Tell people that they will live long and happily on Earth if they stop waging war on one another and learn to reach agreement on everything. For in the beginning was the word."

A further merit of this work by Olga Bogacheva is its harmonious blend of a gripping adventure story, told in light, slightly ironic language, with scientifically sound educational content.

«Мамонтенок из пробирки» ("The Test-Tube Baby Mammoth") is a science-fiction story for middle-school-age children, written in 2018.

Here children become acquainted with scientific hypotheses about the origin of life on Earth.

They learn which finds from the permafrost are on display at the mammoth museum in Siberia, and they take a journey through the pages of the Red Book of endangered species. The story explains why the plant and animal life of planet Earth must be protected.

The baby mammoth, grown in a test tube from an artificial embryo, is stolen by Martians. Feuds and wars among the Martian tribes have turned Mars into a lifeless desert, and to restore its former diversity of animal life the Martians steal animals from Earth. But the baby mammoth belongs to the people of Earth. A chase follows. The Martians hand the baby mammoth back without a fight, because there is nothing to feed it on Mars.

And so the baby mammoth is returned. Two Martians weep at the airport in Siberia. They are sorry to give the baby mammoth up. Their ears droop, which among Martians signifies shame, for stealing is wrong. Still, everything ends peacefully. The young scientists promise to give the Martians a pair of mammoths once there are many of them on Earth.

In all her works, Olga Bogacheva fulfils the fundamental task of a poet and writer: to sow what is reasonable, good, and eternal.